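This article takes an in-depth look at Baidu spider pool rental, explains how simple it is to set up a spider pool, and provides a comprehensive guide. Beginners and professionals alike can find practical information and advice here.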

In web scraping, the spider pool has become an increasingly popular concept. A spider pool, also known as a crawling pool, is a collection of web crawlers that work together to extract data from the web. Setting one up can seem daunting at first, but with the right guidance it is a fairly straightforward process. This article looks at how simple the setup really is and provides a step-by-step guide to help you get started.

Understanding the Basics of a Spider Pool

Before diving into the simplicity of setting up a spider pool, it's essential to understand what it entails. A spider pool consists of multiple spider instances that are designed to navigate through websites, follow links, and extract data. These spiders can be either single-threaded or multi-threaded, depending on the complexity of the task and the website's structure.
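To make the "follow links" part concrete, here is a minimal sketch of what a single spider does with one page, using only Python's standard library. The class name `LinkExtractor`, the helper `extract_links`, and the sample HTML are illustrative assumptions, not part of any particular framework.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects absolute URLs from <a href="..."> tags on one page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links against the page's own URL.
                self.links.append(urljoin(self.base_url, value))


def extract_links(base_url, html):
    """Return every link found in html, resolved against base_url."""
    parser = LinkExtractor(base_url)
    parser.feed(html)
    return parser.links


# Hypothetical page content, used only for illustration.
sample_html = '<a href="/about">About</a> <a href="https://example.org/x">X</a>'
found_links = extract_links("https://example.com/index.html", sample_html)
```

A pool simply runs many such spiders at once, each taking URLs from a shared list of pages still to visit.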

Is Setting Up a Spider Pool Simple?

The answer depends on several factors, including your technical expertise, the tools you choose, and the specific requirements of your project. For those with a basic understanding of programming and web scraping, setting up a spider pool can be relatively simple. Beginners may find it harder, because they have to deal with a range of web technologies and also avoid legal and ethical pitfalls.

The Simple Steps to Setting Up a Spider Pool

1、Choose a Programming Language: The first step is to select a programming language that you are comfortable with. Python, Java, and Ruby are popular choices for web scraping due to their extensive libraries and community support.

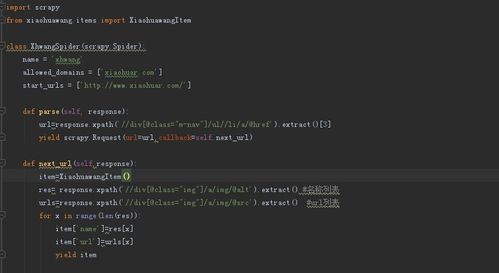

2、Select a Web Scraping Framework: Once you've chosen a programming language, you'll need to select a web scraping framework or library. Python's Scrapy (a full crawling framework), Java's jsoup (an HTML parsing library), and Ruby's Mechanize (a library for automating site interaction) are some of the most widely used options.

3、Design Your Spider: With the framework in place, design your spider. A spider is responsible for navigating through websites, following links, and extracting data. You'll need to define the rules for the spider, such as the domains it should crawl, the pages it should skip, and the data it should extract.

4、Implement Error Handling and Logging: A robust spider pool should have error handling and logging mechanisms to ensure that it can recover from errors and provide insights into its performance.

5、Configure the Spider Pool: Set up the number of spiders in your pool. The number of spiders will depend on the resources available and the scale of your project. It's essential to balance the load and avoid overloading the servers you're scraping.

6、Respect robots.txt: Always adhere to the website's robots.txt file, which specifies the parts of the site that crawlers should not access. Ignoring it can get your IP addresses banned and may expose you to legal risk.

7、Test Your Spider Pool: Before deploying your spider pool, thoroughly test it to ensure it works as expected. Check for data accuracy, performance issues, and any potential legal or ethical concerns.

8、Deploy and Monitor: Once everything is tested and approved, deploy your spider pool. Monitor its performance and make adjustments as needed.
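Steps 3 and 6 can be combined into a single "should this URL be crawled?" check. The sketch below is one possible way to do that with Python's standard library. The domain list, skip patterns, user agent string, and robots.txt content are all hypothetical values chosen for illustration.

```python
import re
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser

# Hypothetical crawl rules: which hosts are in scope and which paths to skip.
ALLOWED_DOMAINS = {"example.com"}
SKIP_PATTERNS = [re.compile(r"/login"), re.compile(r"\.(png|jpg|pdf)$")]
USER_AGENT = "example-spider"

# A real spider would usually load the file with set_url(...) and read().
# It is parsed from a string here so the sketch runs without network access.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""
robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())


def should_crawl(url):
    """Apply the domain rule, the skip rules, and robots.txt, in that order."""
    parts = urlparse(url)
    if parts.hostname not in ALLOWED_DOMAINS:
        return False
    if any(pattern.search(parts.path) for pattern in SKIP_PATTERNS):
        return False
    return robots.can_fetch(USER_AGENT, url)
```

Each spider in the pool would call `should_crawl` before requesting a page, so the rules live in one place rather than being repeated per spider.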
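Steps 4 and 5 (error handling, logging, and pool size) might look roughly like the following sketch, which uses a thread pool from Python's standard library. The function `run_pool`, its parameters `pool_size` and `max_attempts`, and the stand-in `fake_fetch` are assumptions made for illustration. A real pool would pass in a function that performs an actual HTTP request.

```python
import logging
from concurrent.futures import ThreadPoolExecutor, as_completed

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("spider_pool")


def run_pool(urls, fetch, pool_size=4, max_attempts=3):
    """Run fetch(url) across a fixed-size pool; return (results, failures)."""

    def crawl_one(url):
        # Retry a few times so a transient error does not lose the page.
        for attempt in range(1, max_attempts + 1):
            try:
                return fetch(url)
            except Exception as exc:
                log.warning("attempt %d failed for %s: %s", attempt, url, exc)
        raise RuntimeError(f"giving up on {url}")

    results, failures = {}, []
    with ThreadPoolExecutor(max_workers=pool_size) as pool:
        futures = {pool.submit(crawl_one, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
                log.info("crawled %s", url)
            except RuntimeError:
                failures.append(url)
                log.error("failed %s", url)
    return results, failures


# Stand-in fetcher for illustration; it never touches the network.
def fake_fetch(url):
    if "broken" in url:
        raise ConnectionError("simulated network error")
    return f"<html>{url}</html>"


results, failures = run_pool(
    ["https://example.com/a", "https://example.com/broken"], fake_fetch, pool_size=2
)
```

Here `pool_size` is the knob described in step 5: raising it speeds up the crawl but also increases the load on the sites being scraped.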

Challenges and Considerations

Legal and Ethical Issues: Ensure that your web scraping activities comply with the website's terms of service and applicable laws. Avoid scraping personal data or using aggressive scraping techniques that could harm the website's performance.

Rate Limiting: Limit how fast your spiders request pages from any one server, for example by enforcing a minimum delay between requests to the same host, so that the pool does not overwhelm it.

Data Extraction Complexity: Some websites are designed to be difficult to scrape, with complex JavaScript and dynamic content. In such cases, you might need to use additional tools or techniques, like browser automation or headless browsers.

Maintenance: Regularly update your spider pool to handle changes in the website's structure or to improve performance.
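The rate limiting point above can be illustrated with a small per-host throttle. This is a minimal sketch, assuming a single-threaded caller. The class name `DomainThrottle` and the `min_delay` parameter are hypothetical, and a multi-threaded pool would additionally need a lock around the shared state.

```python
import time
from urllib.parse import urlparse


class DomainThrottle:
    """Enforces a minimum delay between requests to the same host."""

    def __init__(self, min_delay=1.0):
        self.min_delay = min_delay
        self._last_request = {}  # host -> time of the most recent request

    def wait(self, url):
        """Sleep if the host was contacted too recently; return seconds slept."""
        host = urlparse(url).hostname
        last = self._last_request.get(host)
        waited = 0.0
        if last is not None:
            remaining = self.min_delay - (time.monotonic() - last)
            if remaining > 0:
                time.sleep(remaining)
                waited = remaining
        self._last_request[host] = time.monotonic()
        return waited
```

A spider would call `throttle.wait(url)` immediately before each request. Requests to different hosts are not delayed by one another, so the pool as a whole can stay busy while each individual server sees a bounded request rate.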

In conclusion, setting up a spider pool can be simple if you have the right knowledge and tools. By following the steps outlined above and being mindful of the challenges and considerations, you can create an efficient and effective spider pool to extract the data you need. Remember, the key to a successful spider pool lies in planning, testing, and continuous improvement.